Over the past few years, Docker has been reshaping the software development and DevOps fields. Many people think Docker is a programming language or a framework, but it is not. Docker is an open source platform for building, shipping, and running containers. It is a tool that lets you manage images and containers. Because of that, before we learn about Docker, it's better to learn about containers first.

Containers

A container is a standardized unit of software that lets developers isolate an app from its environment. With containers, you no longer need to worry about the dependencies of your apps clashing with each other. Every dependency an app needs is installed alongside it and isolated inside the container. Because the app and its dependencies travel together, an app that runs in a container on one machine can be expected to run the same way in a container on another machine.

Advantages of using containers

So, why do we even use containers? What benefits do they give us? Simply put: isolation and limitation.

With a container, we can isolate a specific app from the other components of the host machine (the machine Docker runs on). By default, a container can't access the host machine's storage, and apps on the host don't work with the files inside the container either. Isolation and limitation solve many problems, but let's focus on two main ones: avoiding dependency conflicts and limiting malware damage.

Avoiding dependency conflicts

I'm pretty sure everyone has experienced a dependency conflict on their personal computer. When you install a lot of software on your PC, there is a chance that the dependencies of one program conflict with those of another.

For example, say you want to install two new programs, software A and software B. Software A requires Python 2.1 to be installed on your PC, while software B requires Python 2.3. When you install software A, it works perfectly. But when you install software B, your PC replaces Python 2.1 with version 2.3. Because some features differ between Python 2.1 and 2.3, software A might not work anymore. This kind of thing often happens on production servers too. To make everything work, you need to work around the dependency conflict.

We can avoid dependency conflicts by running each app in its own container. Containers are isolated from each other and from their host machine, so each app keeps its own versions of its dependencies and one app's dependencies can't overwrite another's.
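To make the idea concrete, here is a toy Python model of the software A and software B scenario. It is only a sketch and does not talk to Docker: plain dictionaries stand in for environments, the helper names (`install`, `broken_apps`, `new_environment`) are made up for this illustration, and it assumes, for simplicity, that any version mismatch breaks an app.

```python
# Toy model only: dictionaries stand in for installed software.
# Nothing here talks to Docker; all names are hypothetical.

def new_environment():
    """Create an empty environment with no Python and no apps installed."""
    return {"python": None, "apps": {}}


def install(environment, app, python_version):
    """Install an app together with the Python version it requires."""
    environment["python"] = python_version      # overwrites any earlier version
    environment["apps"][app] = python_version   # remember what the app requires


def broken_apps(environment):
    """Return the apps whose required Python differs from the installed one."""
    return sorted(
        app
        for app, required in environment["apps"].items()
        if required != environment["python"]
    )


# One shared machine: both apps are installed into the same environment.
shared = new_environment()
install(shared, "software A", "2.1")
install(shared, "software B", "2.3")

# One environment per app, the way containers keep apps apart.
container_a = new_environment()
container_b = new_environment()
install(container_a, "software A", "2.1")
install(container_b, "software B", "2.3")

print(broken_apps(shared))       # ['software A']
print(broken_apps(container_a))  # []
print(broken_apps(container_b))  # []
```

On the shared machine, installing software B silently replaces the Python version software A depends on. With one environment per app, neither installation can affect the other.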

Limiting the damage of a virus

Accidentally or not, some of the software you use might contain a virus. If software carrying a virus runs without any resource isolation, it can access the whole host machine. If it runs inside a container, though, it can't access anything more than it is allowed to. Because of that, you can think of a container as a jail for the processes running inside it. Within a container, the virus won't be able to access files outside of the container. It also can't easily exhaust the host's hardware resources (CPU and memory), because limits can be placed on the hardware resources a container uses.
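As a rough illustration of the resource limits mentioned above, the Python sketch below builds (but does not execute) a `docker run` command that caps memory and CPU using the `--memory` and `--cpus` options. The function name `limited_run_command` and the limit values `256m` and `0.5` are arbitrary choices for this example, not recommendations.

```python
import shlex


def limited_run_command(image, memory="256m", cpus="0.5"):
    """Build (but do not run) a `docker run` command with resource limits.

    The default limits are arbitrary example values.
    """
    return [
        "docker", "run",
        "--rm",               # remove the container once it exits
        "--memory", memory,   # upper bound on the memory the container may use
        "--cpus", cpus,       # upper bound on the CPU the container may use
        image,
    ]


command = limited_run_command("hello-world")
print(shlex.join(command))
# docker run --rm --memory 256m --cpus 0.5 hello-world
```

To actually start the container, you could paste the printed command into a terminal on a machine where Docker is installed.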

The differences between containers and virtual machines

If you've been a developer for some time, chances are you've heard of, or even used, a virtual machine (VM). Containers and virtual machines are pretty similar: both isolate and limit the processes running inside them. So, why use containers instead of virtual machines?

A VM is a piece of software that hosts a whole OS and runs on top of a hypervisor, the software layer that shares a physical machine's hardware among VMs. A container, by contrast, provides an isolated space that runs on top of its host OS.

The most important advantage of containers is how quickly a new instance starts. Creating a VM can take minutes because it needs to create a virtual disk, install an OS, allocate resources, and so on. Containers use your host machine's OS, disk, RAM, and CPU directly. This makes creating a container quick, usually a matter of seconds.

Another reason is portability. In Docker, we can use an image to package the contents of a container (we'll learn about images later in this article). To package a VM, though, you need to wrap the whole OS along with its system data, producing an enormous package that is not really portable.

One thing to note is that there is nothing wrong with running containers inside a VM. In fact, many people do exactly that. You can rent a VM from a cloud provider like GCP, AWS, or DigitalOcean and run many containers inside it.

Docker Introduction

As we learned at the start of this article, Docker is an open source platform for running containers, and it is built on Linux. Docker runs on top of a Linux OS by default, even on Windows and Mac. Because of that, when we install Docker on Windows or Mac, Docker will ask us to set up a Linux VM so it can run on top of it.

Note: At the time of writing, Docker is not supported on Apple's new M1 CPU architecture. There is a developer preview, but it isn't stable yet.

Hello world with docker

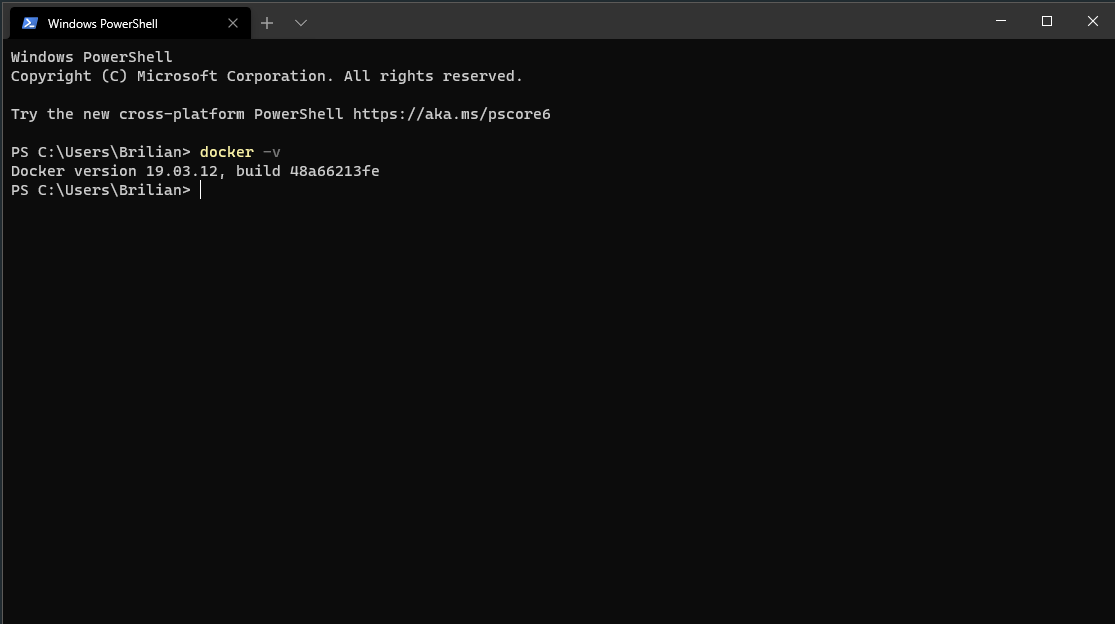

For this section, you'll first need to install Docker Desktop from https://www.docker.com/products/docker-desktop. Once the installation has finished, you can verify it with the command `docker -v`. In my case, I'm using Windows and my installed Docker version is 19.03.12.

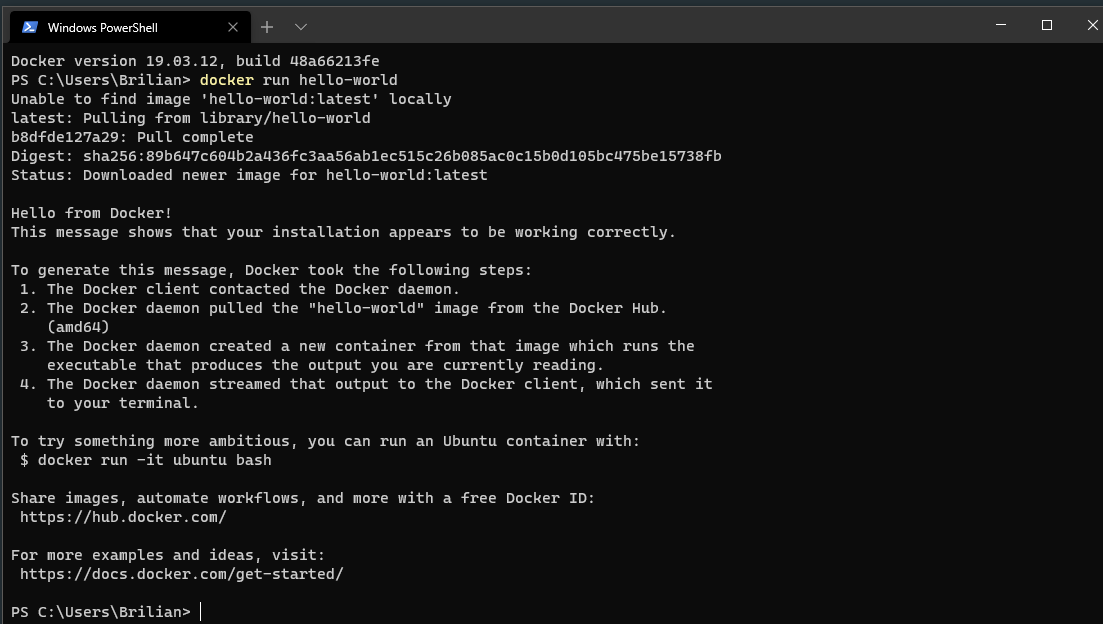

After you've successfully installed Docker, you can try running a hello world Docker image with `docker run hello-world`. You should get a result like the one below:

So, what do you think about the result? Have you noticed the line `Unable to find image 'hello-world:latest' locally`? What actually happened when we ran the previous command?

The first thing you need to know about is the Docker image. A Docker image is basically a snapshot of a container that has been configured previously. In the case of the hello-world image, it's configured to show the message as soon as the container starts.

So, what happened when we ran the command?

- Docker gets the `docker run hello-world` command.
- Docker checks whether the `hello-world` image is in the image cache.
- The image cache returns nothing, showing that the `hello-world` image is not in the image cache.
- Docker checks Docker Hub (Docker's public repository) for the `hello-world` image.
- Docker Hub finds an image tagged `hello-world:latest` and returns it to the Docker on your machine. Note that by default Docker gets the `latest` tag if you don't specify a tag version.
- Docker then creates a container from the image it got, displaying the output on your terminal screen.
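The lookup steps above can be sketched as a small Python simulation. This is a toy model under stated assumptions, not how Docker is implemented: dictionaries stand in for the local image cache and for Docker Hub, the function name `resolve_image` is hypothetical, and apart from the "Unable to find image" line the log messages are invented for the illustration.

```python
# Toy simulation: dictionaries stand in for the image cache and Docker Hub.
# `resolve_image` and most log messages are hypothetical, for illustration only.

DEFAULT_TAG = "latest"


def resolve_image(name, local_cache, registry):
    """Return (image, log) for `name`, pulling from the registry on a cache miss."""
    # When no tag is given, fall back to the default tag.
    reference = name if ":" in name else f"{name}:{DEFAULT_TAG}"
    log = []

    # Check the local image cache first.
    if reference in local_cache:
        log.append(f"Found image '{reference}' locally")
        return local_cache[reference], log

    # Cache miss: ask the registry instead.
    log.append(f"Unable to find image '{reference}' locally")
    if reference not in registry:
        raise LookupError(f"{reference} is not in the registry either")

    # Keep a copy in the cache so the next run does not need the registry.
    local_cache[reference] = registry[reference]
    log.append(f"Pulled '{reference}' from the registry")
    return local_cache[reference], log


registry = {"hello-world:latest": "image that prints a greeting"}
local_cache = {}

_, first_run = resolve_image("hello-world", local_cache, registry)
_, second_run = resolve_image("hello-world", local_cache, registry)

print(first_run)
print(second_run)
```

The first call misses the cache and falls back to the registry. The second call finds the image locally, so in this model the "Unable to find image" line only shows up the first time.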

Docker basic commands

Now that we understand how to run an image with docker run, let's learn some basic commands of Docker.